This is a recent, elegant and upsetting PNAS paper:

Science faculty’s subtle gender biases favor male students

Corinne A. Moss-Racusin, John F. Dovidio, Victoria L. Brescoll, Mark J. Graham, and Jo Handelsman

Abstract: Despite efforts to recruit and retain more women, a stark gender disparity persists within academic science. Abundant research has demonstrated gender bias in many demographic groups, but has yet to experimentally investigate whether science faculty exhibit a bias against female students that could contribute to the gender disparity in academic science. In a randomized double-blind study (n = 127), science faculty from research-intensive universities rated the application materials of a student—who was randomly assigned either a male or female name—for a laboratory manager position. Faculty participants rated the male applicant as significantly more competent and hireable than the (identical) female applicant. These participants also selected a higher starting salary and offered more career mentoring to the male applicant. The gender of the faculty participants did not affect responses, such that female and male faculty were equally likely to exhibit bias against the female student. Mediation analyses indicated that the female student was less likely to be hired because she was viewed as less competent. We also assessed faculty participants’ preexisting subtle bias against women using a standard instrument and found that preexisting subtle bias against women played a moderating role, such that subtle bias against women was associated with less support for the female student, but was unrelated to reactions to the male student. These results suggest that interventions addressing faculty gender bias might advance the goal of increasing the participation of women in science.

The authors construct a fake job application for a hypothetical undergraduate student who is applying to work as a scientific technician/lab manager in a laboratory (this is a common stepping-stone to entering a doctoral program and becoming a PhD researcher). The authors randomly assign a male or female name to the applicant and distribute the application to principal investigators (PhD scientists who run real labs), asking them to score the applicant on a variety of metrics such as "competence" and "hireability." The only difference between applications is the gender of the student. The results are unambiguous:

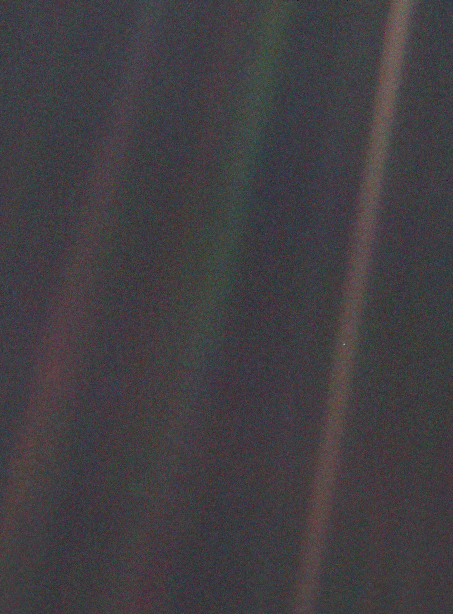


It is possible to construct an explanation for why researchers would mentor male students more without invoking sexism -- e.g., if a scientist believes a female student is more likely to leave the field, they may feel there is less personal reward in spending time mentoring female students. But (and this is where I congratulate the authors for a well-designed experiment) there is no way that differences in "competence" scores can be explained without sexism.

The authors then ask these principal investigators how much they would be willing to pay the applicant to work in their lab:


The authors write:

Finally, using a previously validated scale, we also measured how much faculty participants liked the student (see SI Materials and Methods). In keeping with a large body of literature, faculty participants reported liking the female (mean = 4.35, SD = 0.93) more than the male student [(mean = 3.91, SD = 0.1.08), t(125) = −2.44, P < 0.05]. However, consistent with this previous literature, liking the female student more than the male student did not translate into positive perceptions of her composite competence or material outcomes in the form of a job offer, an equitable salary, or valuable career mentoring.

Every teacher, researcher and mentor in the sciences should read the paper (open access here) and do some soul-searching, asking themselves if they consciously or subconsciously discriminate against female students, employees in the lab or colleagues. In addition, I also think we all have the responsibility to keep one another honest and to make one another aware of situations and decisions when we might mistakenly judge our students, employees or peers based on their sex and not on their scientific merit.

Overall, this paper is carefully designed and convincing with writing that is thoughtful and readable.

Just a few comments before proceeding:

- The title of the paper describes the results as a "subtle" bias. But as my fiance (a PhD) points out, if effects of this size were found in any other context, they would be held up as "big" effects. I think the use of the word "subtle" is a bit confusing, since I think the authors are referring to the bias being subconscious, rather than referring to the magnitude of the bias (which is not subtle).

- Even if these biases are subconscious, they are still sexist. I understand why the authors don't use this language in a published paper, but in discussing these results in the context of our own conduct, it seems important to not shy away from what is really going on. Describing these results as a subconscious bias, rather than sexism, may make them seem more excusable.

- The central contribution of the paper is to simply point out what is going on. Once we are aware of our own biases, especially if they are subconscious, we can make a conscious effort to correct them. But in addition to each of us reflecting on our own actions, there are some easy institutional mechanisms that can be developed to help us avoid these biases. For example, universities could make it easy for scientists to receive job applications through an electronic system that automatically double-blinds the applicant. (Many peer-reviewed journals do this when sending papers out to referees.) Unfortunately, it is harder to protect students and researchers in day-to-day activities, so if sexist treatment persists even after the hiring process, this will be harder to address. But much more certainly could be done. For example, it should be standard that an oversight committee anonymously surveys students and employees regularly to determine if there is statistical evidence of discrimination within departments or individual laboratories (which tend to behave a bit like small fiefdoms, with little to no oversight of the principal investigator's behavior towards students/employees). The NSF and various funding agencies frequently award money to labs for "teaching and mentoring," so they should make these anonymous evaluations and their analysis (or something similar) a requirement for this funding.

The authors are careful to check whether sexism is a strictly male phenomenon (i.e., male faculty discriminating against female students). They do this by constructing this table:


The authors find that both male and female mentors exhibit sexism. But the authors do not push the data as far as they could: they make no statements about whether the bias of male mentors is larger or smaller than that of female mentors. The authors write:

In support of hypothesis B, faculty gender did not affect bias (Table 1). Tests of simple effects (all d < 0.33) indicated that female faculty participants did not rate the female student as more competent [t(62) = 0.06, P = 0.95] or hireable [t(62) = 0.41, P = 0.69] than did male faculty. Female faculty also did not offer more mentoring [t(62) = 0.29, P = 0.77] or a higher salary [t(61) = 1.14, P = 0.26] to the female student than did their male colleagues. In addition, faculty participants’ scientific field, age, and tenure status had no effect (all P < 0.53). Thus, the bias appears pervasive among faculty and is not limited to a certain demographic subgroup.

And later in the discussion:

Our results revealed that both male and female faculty judged a female student to be less competent and less worthy of being hired than an identical male student, and also offered her a smaller starting salary and less career mentoring. Although the differences in ratings may be perceived as modest, the effect sizes were all moderate to large (d = 0.60–0.75). Thus, the current results suggest that subtle gender bias is important to address because it could translate into large real-world disadvantages in the judgment and treatment of female science students (39). Moreover, our mediation findings shed light on the processes responsible for this bias, suggesting that the female student was less likely to be hired than the male student because she was perceived as less competent. Additionally, moderation results indicated that faculty participants’ preexisting subtle bias against women undermined their perceptions and treatment of the female (but not the male) student, further suggesting that chronic subtle biases may harm women within academic science. Use of a randomized controlled design and established practices from audit study methodology support the ecological validity and educational implications of our findings (SI Materials and Methods).

It is noteworthy that female faculty members were just as likely as their male colleagues to favor the male student. The fact that faculty members’ bias was independent of their gender, scientific discipline, age, and tenure status suggests that it is likely unintentional, generated from widespread cultural stereotypes rather than a conscious intention to harm women (17). Additionally, the fact that faculty participants reported liking the female more than the male student further underscores the point that our results likely do not reflect faculty members’ overt hostility toward women. Instead, despite expressing warmth toward emerging female scientists, faculty members of both genders appear to be affected by enduring cultural stereotypes about women’s lack of science competence that translate into biases in student evaluation and mentoring.

Now here is a bit that I am adding. Looking at Table 1, it seemed like the bias for female mentors was larger, but it was hard to tell based on the layout of the table (you have to hold the differences in your head since they aren't written down). This caught my attention because it's an issue that was raised by Anne-Marie Slaughter's recent Atlantic article, which I had discussed extensively with family and friends.

So I copied the data from Table 1 into Excel, reorganized it and explicitly compared the magnitude of the bias based on the gender of the faculty-mentor. In the first two panels, the column "difference" is the magnitude of the bias in favor of male students. In the bottom panel, I compare these biases and take their difference (a difference-in-differences) to see whether male or female mentors are more biased (a positive number means female faculty are more biased). The last column lists how much larger the bias is for female faculty relative to male faculty.

Both male and female faculty exhibit sexism. But across all four measures, female faculty exhibit a larger bias than male faculty (I don't have the raw data, so I can't know if the difference is statistically significant in this sample -- but the direction of the bias is clearly consistent across measures). Without any evidence that the male student has more merit, the female faculty member is on average 15% more biased when it comes to evaluating whether the applicant is competent; and the female faculty member offers the male student an additional $920 in salary on top of the $3,400 extra that the male faculty offered him. Now, I am not trying to point the finger at women faculty to distract from the fact that male faculty are sexist. Discrimination by either group is unacceptable. I am simply trying to highlight one additional point that was skipped over in the original analysis and which Anne-Marie Slaughter argues is an important (but under-discussed) obstacle for professional women.
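For readers who want to redo this comparison without a spreadsheet, here is a minimal Python sketch of the difference-in-differences arithmetic described above. The function names are my own, and the four cell means are hypothetical placeholders, not the values in the paper's Table 1; I chose them only so that the gaps match the $3,400 and $920 salary figures quoted in this post. I am also assuming that "how much larger" means the difference-in-differences divided by the male-faculty bias.

```python
def bias(male_student_mean, female_student_mean):
    """Gap in favor of the male student within one group of faculty."""
    return male_student_mean - female_student_mean


def diff_in_diff(male_faculty_cells, female_faculty_cells):
    """Compare the bias of female faculty with the bias of male faculty.

    Each argument is a (male_student_mean, female_student_mean) pair.
    A positive difference-in-differences means female faculty are more biased.
    """
    male_faculty_bias = bias(*male_faculty_cells)
    female_faculty_bias = bias(*female_faculty_cells)
    did = female_faculty_bias - male_faculty_bias
    # Assumed definition: extra bias of female faculty relative to male faculty.
    relative_increase = did / male_faculty_bias
    return male_faculty_bias, female_faculty_bias, did, relative_increase


# HYPOTHETICAL salary means (dollars), NOT the paper's Table 1 values.
# They are picked only to reproduce the $3,400 and $920 gaps quoted in the post.
male_faculty_salary = (30000.0, 26600.0)    # (male student, female student)
female_faculty_salary = (30000.0, 25680.0)  # (male student, female student)

m_bias, f_bias, did, rel = diff_in_diff(male_faculty_salary, female_faculty_salary)
print(f"male faculty bias:   ${m_bias:,.0f}")
print(f"female faculty bias: ${f_bias:,.0f}")
print(f"difference-in-differences: ${did:,.0f} ({rel:.0%} larger)")
```

As in the spreadsheet, this only compares point estimates. Without the raw data it says nothing about whether the gap between male and female faculty is statistically significant.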

The findings of this study are important, and they indicate that all of us, men and women alike, should take a cold hard look at our own decisions, behaviors and tendencies. Sexism of this magnitude and scale, among some of the most highly educated members of society, is unacceptable. If you observe a colleague who treats their male and female students differently, or if you see that they run a lab full of happy young men and miserable young women, take them aside and ask them what is going on. It is not easy to call a colleague out on these things, but they would probably rather hear it from you than from an internal review board -- and more importantly, we owe it to our students and employees who work hard for us and look up to their mentors for education, guidance and leadership.

It is morally indefensible that sexism of this magnitude persists in our scientific communities and that the young women who are discriminated against suffer at the hands of their teachers and mentors. Moreover, we all lose out every time that a talented young woman, who would have made scientific discoveries benefitting the world, leaves science because of discrimination.